GazeInterpreter: Parsing Eye Gaze to Generate Eye-Body-Coordinated Narrations

Qing Chang, Zhiming Hu

Proceedings of the AAAI Conference on Artificial Intelligence, 2026.

Abstract

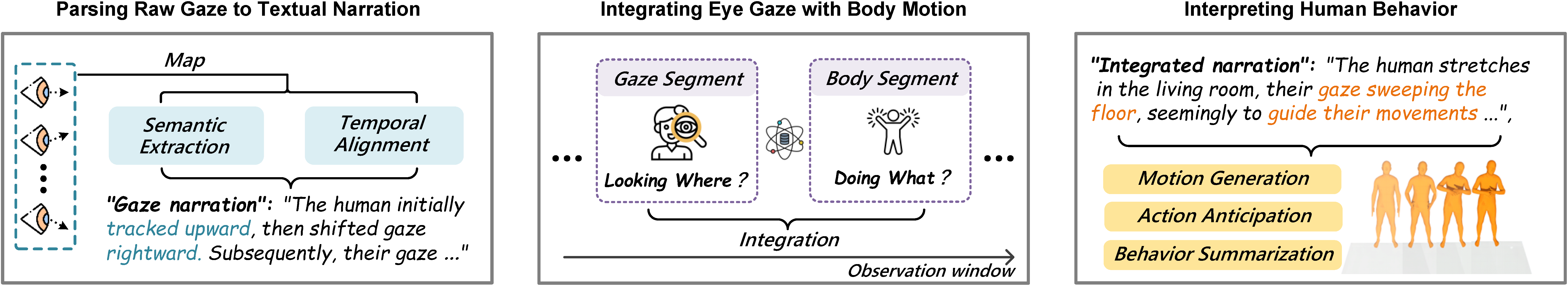

Comprehensively interpreting human behavior is a core challenge in human-aware artificial intelligence. However, prior work has typically focused on body behavior, neglecting the crucial role of eye gaze and its synergy with body motion. We present GazeInterpreter, a novel approach based on large language models (LLMs) that parses eye gaze data to generate eye-body-coordinated narrations. Specifically, our method features 1) a symbolic gaze parser that translates raw gaze signals into symbolic gaze events; 2) a hierarchical structure that first uses an LLM to generate a semantic-level narration of eye gaze and then integrates gaze with body motion within the same observation window to produce an integrated narration; and 3) a self-correcting loop that iteratively refines the modality match, temporal coherence, and completeness of the integrated narration. This hierarchical and iterative processing can effectively align physical signal values with semantic text in both the temporal and spatial domains. We validated the effectiveness of our eye-body-coordinated narrations on the text-driven motion generation task on the large-scale Nymeria benchmark. Moreover, we report significant performance improvements on two sample downstream tasks: action anticipation and behavior summarization. Taken together, these results reveal the significant potential of parsing eye gaze to interpret human behavior and open up a new direction for human behavior understanding.
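The abstract does not specify how the symbolic gaze parser turns raw gaze signals into events. As a rough illustration of the general idea only, the sketch below substitutes the standard velocity-threshold identification (I-VT) algorithm, which labels each interval between consecutive gaze samples as a fixation or a saccade by its angular velocity and merges runs of the same label into events. The `GazeEvent` class, the `parse_gaze` function, the `<label start-end s>` symbol format, and the 30 deg/s default threshold are all hypothetical choices for this sketch, not the authors' parser.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class GazeEvent:
    """A hypothetical symbolic gaze event spanning [start, end] seconds."""
    label: str   # "fixation" or "saccade" in this I-VT sketch
    start: float
    end: float

    def to_symbol(self) -> str:
        # Text token that a downstream LLM prompt could consume (assumed format).
        return f"<{self.label} {self.start:.2f}-{self.end:.2f}s>"


def _angle_deg(a: Tuple[float, float, float], b: Tuple[float, float, float]) -> float:
    """Angle in degrees between two 3D gaze direction vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    cos = max(-1.0, min(1.0, dot / (na * nb)))  # clamp rounding error for acos
    return math.degrees(math.acos(cos))


def parse_gaze(timestamps: Sequence[float],
               directions: Sequence[Tuple[float, float, float]],
               velocity_threshold_deg: float = 30.0) -> List[GazeEvent]:
    """I-VT segmentation: label each sample interval by angular velocity,
    then merge consecutive intervals that share a label into one event."""
    if len(timestamps) != len(directions):
        raise ValueError("timestamps and directions must have equal length")
    events: List[GazeEvent] = []
    for i in range(1, len(timestamps)):
        dt = timestamps[i] - timestamps[i - 1]
        if dt <= 0:
            raise ValueError("timestamps must be strictly increasing")
        velocity = _angle_deg(directions[i - 1], directions[i]) / dt  # deg/s
        label = "saccade" if velocity > velocity_threshold_deg else "fixation"
        if events and events[-1].label == label:
            events[-1].end = timestamps[i]  # extend the current event
        else:
            events.append(GazeEvent(label, timestamps[i - 1], timestamps[i]))
    return events


if __name__ == "__main__":
    t = [0.0, 0.1, 0.2, 0.3, 0.4]
    s10, c10 = math.sin(math.radians(10)), math.cos(math.radians(10))
    d = [(0.0, 0.0, 1.0)] * 3 + [(s10, 0.0, c10)] * 2  # 10 degree jump at t=0.3
    print(" ".join(e.to_symbol() for e in parse_gaze(t, d)))
```

In this sketch the symbolic output is a compact event sequence rather than raw samples, which is the kind of representation an LLM can narrate; the actual event vocabulary used by GazeInterpreter may be richer (for example, events tied to scene objects).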

BibTeX

@inproceedings{chang26gazeinterpreter,
  author    = {Chang, Qing and Hu, Zhiming},
  title     = {GazeInterpreter: Parsing Eye Gaze to Generate Eye-Body-Coordinated Narrations},
  booktitle = {Proceedings of the AAAI Conference on Artificial Intelligence},
  year      = {2026}
}